Authored by Autumn Spredemann via The Epoch Times,

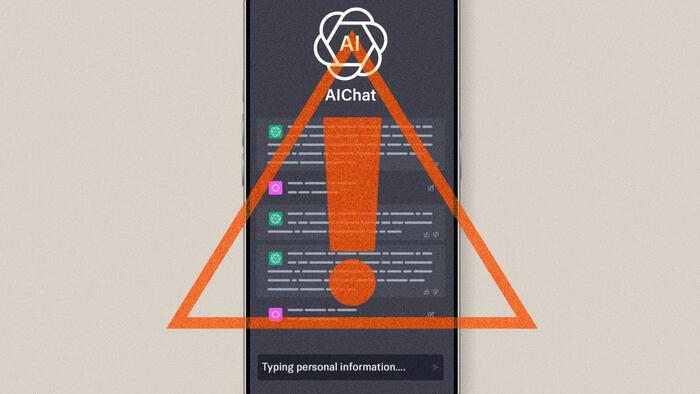

Amid a global rush by companies small and large to adopt artificial intelligence (AI), experts are raising alarms about data security.

As AI capabilities accelerate, the same models that can detect fraud, secure networks, or anonymize records can also infer identities, reassemble stripped-out details, and expose sensitive information, according to a McKinsey & Company report released in May.

Ninety percent of companies are not modernized enough to defend against AI-driven threats, according to a 2025 Accenture cybersecurity resilience report. The report drew on insights from 2,286 security and technology executives across 24 industries in 17 countries about their readiness for AI-driven cyberattacks.

So far this year, the Identity Theft Resource Center has confirmed 1,732 data breaches. The center also noted a “continued rise of AI-powered phishing attacks,” which are becoming more sophisticated and harder to detect. Financial services, health care, and professional services are among the industries hit hardest by these breaches.

“It is the predictable consequence of a technology that has been deployed with breathtaking speed, often outpacing organizational discipline, regulatory provisions, and user vigilance. The fundamental design of most generative AI systems makes this outcome almost inevitable,” Danie Strachan, senior privacy counsel at VeraSafe, told The Epoch Times.

AI systems are designed to absorb immense volumes of information for training, and that design creates vulnerabilities.

“When employees, often with their best intentions, provide AI systems with sensitive business data—be it strategy documents, confidential client information, or internal financial records—that information is absorbed into a system over which the company has little to no control,” Strachan said.

This is especially true if consumer versions of these AI tools are used and protective measures are not enabled.

“It is very much similar to pinning your confidential files on a public noticeboard and hoping no one takes a copy,” Strachan said.

Grok, DeepSeek, and ChatGPT apps are displayed on a smartphone screen in London on Feb. 20, 2025. Justin Tallis/AFP via Getty Images

Multifaceted Threat

Personal data can end up in the wrong hands, or be exposed by AI tools, in several ways. Hacker-directed attacks and theft are well known; another route is what researchers call “privacy leakage.”

Publicly accessible large language models (LLMs) are a common route by which information ends up in a data breach or “leak.”

This year, according to Obsidian Security, a security researcher discovered that 143,000 user queries and conversations with popular LLMs, including ChatGPT, Grok, Claude, and Copilot, were publicly available on Archive.org.

“This discovery follows closely on the heels of recent disclosures that ChatGPT queries were being indexed by search engines like Google. Incidents like these continue to raise broader concerns about data leakage and inadvertent exposure of sensitive information through AI platforms,” the Obsidian report stated.

“The highest risk is the inadvertent exposure of sensitive data when team members interact with public-facing AI tools,” Strachan said.

He added that organizational information is at risk as well as personal data, given how many people use LLMs for work tasks.

“With access to multimodal AI, the risk extends to documents, spreadsheets, transcripts, and audio recordings from meetings, and video content. This data, once shared, can be used to train future models, potentially surfacing in responses to queries from other users, including competitors,” Strachan said.

But it’s not just through LLMs that data breaches occur. AI chatbots can access information in nearly every aspect of the digital world.

“I recorded 47 cases of data exposure on gaming platforms in Q3, the vast majority of which were related to AI chatbots that have unlimited access to the backend,” Michael Pedrotti, the co-founder of game server hosting company GhostCap, told The Epoch Times.

OpenAI CEO Sam Altman speaks during the OpenAI DevDay event in San Francisco on Nov. 6, 2023. Justin Sullivan/Getty Images

Pedrotti said mounting pressure to deploy AI systems quickly is setting the stage for security “catastrophes.”

“Organizations relate AI directly to customer databases without the existence of adequate isolation layers. The executives who insist on AI implementation in weeks are cutting corners,” he said.

“The outcome is AI systems that have access to everything, including payment cards, personal messages, without oversight or logging policies.”

Pedrotti has spent years working with cybersecurity infrastructure. He said the most hazardous data privacy blind spot lies in what he called “vector embeddings.” These are numerical representations of data such as words, images, or audio. Each item is transformed into a “vector,” and similar items are placed closer together, which allows AI models to process and understand complex information.

“These mathematical encodings of the text seem anonymous, but the personal data is fully retrievable,” Pedrotti said.
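To make that point concrete, here is a minimal Python sketch. It assumes a toy word-count “embedding” rather than a real learned model, and the records, vocabulary, and function names are all hypothetical. It shows that a stored vector, even after its source text has been deleted, can be matched back to the original record by anyone who can embed candidate texts the same way.

```python
import math
from collections import Counter

# Hypothetical records an AI helper might have indexed (illustrative only).
RECORDS = [
    "alice smith card ending 4242 refund request",
    "bob jones password reset for payroll portal",
    "team lunch planned for friday",
]

def tokenize(text: str) -> list[str]:
    return text.lower().split()

def build_vocab(texts: list[str]) -> list[str]:
    # Sorted so every text maps onto the same fixed order of dimensions.
    return sorted({tok for t in texts for tok in tokenize(t)})

def embed(text: str, vocab: list[str]) -> list[float]:
    """Toy embedding: a unit-length word-count vector over a fixed vocabulary."""
    counts = Counter(tokenize(text))
    vec = [float(counts[w]) for w in vocab]
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec

def cosine(a: list[float], b: list[float]) -> float:
    # Inputs are already unit length, so the dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))

def nearest(query_vec: list[float], candidates: list[str], vocab: list[str]) -> str:
    """Return the candidate text whose embedding lies closest to query_vec."""
    return max(candidates, key=lambda t: cosine(query_vec, embed(t, vocab)))

vocab = build_vocab(RECORDS)
# Keep only the vector, as if the original text had been deleted.
stored = embed(RECORDS[0], vocab)
print(nearest(stored, RECORDS, vocab))  # → alice smith card ending 4242 refund request
```

Production systems use dense, learned embeddings, so this is only an analogy, and it does not reproduce any specific case Pedrotti described. The principle it illustrates is the one he points to: similar texts map to nearby vectors, so a vector on its own is not anonymous.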

For now, Pedrotti said, records cannot be deleted from AI models the way they can from traditional databases, because the information is permanently embedded.

“I have even uncovered sensitive employee discussions with corporate AI helpers months after they deleted the original messages in Slack. The information is incorporated into the fundamental body of AI,” he said.

“AI’s potential benefits, particularly on the bottom line, are causing organizations to want to jump on the bandwagon quickly,” Parker Pearson, chief strategy officer at Donoma Software, told The Epoch Times.

Pearson said she has seen this pattern with other technology booms.

“The common denominator of each of these ‘tech-rushes’ is that important details, usually with a direct security impact, get overlooked or overruled in the moment,” she said.

A man at a laptop in Washington on March 18, 2025. Madalina Vasiliu/The Epoch Times

Taking Action

Pearson believes the situation will get worse unless more proactive steps are taken.

At the individual level, she said, people need to educate themselves on good cybersecurity practices. Strong passwords and multi-factor authentication are common knowledge to some, but evidence suggests most internet users still have poor digital habits.

A recent survey of 1,000 U.S. adults by web security company All About Cookies found that 84 percent of internet users have “unsafe” password practices, such as using personal information. In the same group, 50 percent of respondents reported reusing passwords, and 59 percent said they share at least one account password with others, such as for a streaming platform or a bank account.

Pearson said it’s time to stop trusting that companies will live up to their data privacy statements.

“Start asking questions before automatically handing over personally identifiable information. Start pushing for accountability,” she said.

“There needs to be consequences far beyond the 12 months of identity monitoring offered by organizations that have been breached. Frankly, the fact that is accepted as remediation is offensive.”

Pearson believes organizations that fail to maintain data trust should face higher penalties, because their customers will likely feel the effects permanently.

Most cybersecurity experts agree that smart individual practices are important, but Strachan said that when it comes to AI data privacy, most of the accountability lies with businesses.

“The burden of reducing AI risks falls on organizations. They must implement staff awareness programs that train their employees to treat every prompt like an email to the front page of a daily newspaper,” he said.

Strachan believes that to reduce risks, businesses should deploy enterprise AI tools with training disabled, data retention limited, and access tightly controlled. He said AI “explodes” the risk surface of privacy management, which will become more complex as the technology evolves.

People visit an AI data center at SK Networks during the Mobile World Congress, the world’s biggest mobile fair, in Barcelona on March 3, 2025. Manaure Quintero/AFP via Getty Images

Legislation is also being outpaced by increasingly advanced AI systems, and companies are already exploiting the privacy grey areas that result.

“AI businesses are taking advantage of a huge privacy legislation loophole. According to them, model training is not data storage, which means they do not need to be deleted at all,” Pedrotti said.

He has followed cases of companies shifting training operations offshore specifically to avoid regulatory compliance while still delivering the service to the same users.

“The use of cross-jurisdictional models establishes loopholes,” he said.

“The existing laws focus on the data gathering aspect but do not explain how AI works with such data and permanently incorporates it into the algorithms.”

Pearson said the “gaps” in current AI legislation that are vulnerable to exploitation include cross-border information flows, training data, web scraping, algorithmic decision-making, and third-party data sharing.

Strachan echoed the same concerns. “Regulatory delays are creating dangerous ambiguity around liability. … This created grey areas threat actors can potentially exploit, particularly in cross-border scenarios where jurisdictional gaps amplify the problem,” he said.

The United States and the European Union have begun to address data security as AI systems continue to advance.

The White House’s 2025 AI Action Plan highlighted the need to boost cybersecurity for critical infrastructure. The plan also noted that AI systems are susceptible to adversarial inputs such as data poisoning and privacy attacks.

Officials in the EU have taken a similar stance, warning that inadequate security around LLMs could lead to breaches of sensitive or private information used to train AI models.
